Saw this reported on MSNBC this morning. From the CDC's NCHS (pdf):

Studies suggest that the presence of multiple chronic conditions (MCC) adds a layer of complexity to disease management; recently the U.S. Department of Health and Human Services established a strategic framework for improving the health of this population. This report presents estimates of the population aged 45 and over with two or more of nine self-reported chronic conditions, using a definition of MCC that was consistent in the National Health Interview Survey (NHIS) over the recent 10-year period: hypertension, heart disease, diabetes, cancer, stroke, chronic bronchitis, emphysema, current asthma, and kidney disease. Examining trends in the prevalence of MCC informs policy on chronic disease management and prevention, and helps to predict future health care needs and use for Medicare and other payers...
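To make the definition concrete, here is a minimal R sketch of how "two or more of nine conditions, among those aged 45 and over" might be computed. The respondents are made up for illustration, the column names are my own, and the simple unweighted mean is a simplification (the actual NHIS estimates rely on survey weights).

```r
# The nine conditions named in the report's definition of MCC.
conditions <- c("hypertension", "heart_disease", "diabetes", "cancer", "stroke",
                "chronic_bronchitis", "emphysema", "current_asthma", "kidney_disease")

# Hypothetical respondents (made-up values, NOT survey data): 1 = reported, 0 = not.
respondents <- data.frame(
  age = c(52, 67, 45, 71, 39),
  matrix(0L, nrow = 5, ncol = length(conditions),
         dimnames = list(NULL, conditions))
)
respondents[1, c("hypertension", "diabetes")] <- 1L
respondents[2, c("hypertension", "heart_disease", "cancer")] <- 1L
respondents[3, "current_asthma"] <- 1L
respondents[5, c("hypertension", "diabetes")] <- 1L

# Restrict to the population covered by the report: aged 45 and over.
eligible <- respondents[respondents$age >= 45, ]

# Count reported conditions per respondent; MCC means two or more of the nine.
eligible$n_conditions <- rowSums(eligible[, conditions])
eligible$mcc <- eligible$n_conditions >= 2

# Unweighted share with MCC (a simplification; real estimates are weighted).
prevalence <- mean(eligible$mcc)
print(eligible[, c("age", "n_conditions", "mcc")])
print(prevalence)
```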

Summary

These findings demonstrate the widespread rise in the prevalence of two or more of nine selected chronic conditions over a 10-year period. The most common combinations of chronic conditions––hypertension and diabetes, hypertension and heart disease, and hypertension and cancer––increased during this time. Between 1999–2000 and 2009–2010, adults aged 45–64 with two or more chronic conditions had increasing difficulty obtaining needed medical care and prescription drugs because of cost.

Growth in the prevalence of MCC was driven primarily by increases in three of the nine individual conditions. During this 10-year period, prevalence of hypertension increased from 35% to 41%, diabetes from 10% to 15%, and cancer from 9% to 11%, among those aged 45 and over. A limitation of this report is that it includes only respondent-reported information of a physician diagnosis; thus, estimates may be understated because they do not include undiagnosed chronic conditions.

Increases in the prevalence of MCC may be due to a rise in new cases (incidence) or longer duration with chronic conditions. The prevalence of obesity—a risk factor for certain types of heart disease and cancer, hypertension, stroke, and diabetes—increased in the United States over the past 30 years, but has leveled off in recent years (7–9). Advances in medical treatments and drugs are contributing to increased survival for persons with some chronic conditions. During this 10-year period, death rates for heart disease, cancer, and stroke declined. In recent years, the percentage of Americans who were aware of their hypertension, and the use of hypertension medications, has increased.

The rising prevalence of MCC has implications for the financing and delivery of health care. Persons with MCC are more likely to be hospitalized, fill more prescriptions and have higher annual prescription drug costs, and have more physician visits. Out-of-pocket spending is higher for persons with multiple chronic conditions and has increased in recent years.

Chronic disease, and combinations of chronic diseases, affects individuals to varying degrees and may impact an individual’s life in different ways. The increasing prevalence of MCC presents a complex challenge to the U.S. health care system, both in terms of quality of life and expenditures for an aging population.

Current and incipient Old Coots (like me), with our accrued Co-morbid Chronics, we're gonna hammer the system, in terms of UTIL.

One thing that will help:

The progress of modern applied science has been defined by a series of outrageously ambitious projects, from the effort to build the first atomic bomb to the race to sequence the human genome.

Well, yeah. Lawrence and Lincoln Weeds' "Medicine in Denial" 101.

For scientists and engineers today, perhaps the greatest challenge is the structure and assembly of a unified health database, a "big data" project that would collect in one searchable repository all of the parameters that measure or could conceivably reflect human well-being. This database would be "coherent," meaning that the association between individuals and their data is preserved and maintained. A recent Institute of Medicine (IOM) report described the goal as a "Knowledge Network of Disease," a "unifying framework within which basic biology, clinical research, and patient care could co-evolve."

The information contained in this database - expected to get denser and richer over time -- would encompass every conceivable domain, covering patients (DNA, microbiome, demographics, clinical history, treatments including therapies prescribed and estimated adherence, lab tests including molecular pathology and biomarkers, info from mobile devices, even app use), providers (prescribing patterns, treatment recommendations, referral patterns, influence maps, resource utilization), medical product companies (clinical trial data), payors (claims data), diagnostics companies, electronic medical record companies, academic researchers, citizen scientists, quantified selfers, patient communities - and this just starts to scratch the surface.

The underlying assumption here is that this information, appropriately analyzed, should improve both our potential and attained health, pointing us towards future medical insights while enabling us to immediately improve care by optimizing the use of existing resources and technologies...

A culture of denial subverts the health care system from its foundation. The foundation—the basis for deciding what care each patient individually needs— is connecting patient data to medical knowledge. That foundation, and the processes of care resting upon it, are built by the fallible minds of physicians. A new, secure foundation requires two elements external to the mind: electronic information tools and standards of care for managing clinical information.

Electronic information tools are now widely discussed, but the tools depend on standards of care that are still widely ignored. The necessary standards for managing clinical information are analogous to accounting standards for managing financial information. If businesses were permitted to operate without accounting standards, the entire economy would be crippled. That is the condition in which the $2½ trillion U.S. health care system finds itself—crippled by lack of standards of care for managing clinical information. The system persists in a state of denial about the disorder that our own minds create, and that the missing standards of care would expose.

This pervasive disorder begins at the system’s foundation. Contrary to what the public is asked to believe, physicians are not educated to connect patient data with medical knowledge safely and effectively. Rather than building that secure foundation for decisions, physicians are educated to do the opposite—to rely on personal knowledge and judgment—in denial of the need for external standards and tools. Medical decision making thus lacks the order, transparency and power that enforcing external standards and tools would bring about...

Medical practice is thus trapped in a subjective realm. Unlike scientific practitioners, medical practitioners do not operate in an objective realm, where the contents of thought and knowledge exist independently of the individual mind, a realm where knowledge can be reliably transmitted and applied, where new knowledge can be rapidly translated into practice, where all knowledge can be tested against patient realities. Isolated from this objective realm, the mind becomes a negative force, a cause of confusion and disorder. Physicians are not equipped to fulfill their immense responsibility safely and effectively. Other practitioners are not equipped to share that responsibility with physicians. Patients are not equipped to work effectively with multiple practitioners, nor to assume the ultimate burden of decision making over their own bodies and minds. Third parties are not equipped to create order out of this chaos. Practitioners and patients are not accountable for their own behaviors, while third parties are left free to manipulate disorder for their own advantage.

In short, essential standards of care, information tools and feedback mechanisms are missing from the marketplace. These missing elements are in large part already developed (see parts IV and VI below). Yet, the underlying medical culture does not even recognize their absence. This does not prevent some practitioners from becoming virtuoso performers in narrow specialties or skills. But their virtuosity is personal, not systemic, and limited, not comprehensive. Missing is a total system for enforcing high quality care by all practitioners for all patients...

Medical decision making requires sorting through a vast body of available information to identify the limited information actually needed for each patient. That individually-relevant information must be applied reliably and efficiently, without unnecessary trial and error. This requires highly organized analysis. Educated guesswork is not good enough.

Were I The Boss of Health Care, this book would be Med School Year One Semester One Required Reading. It's that good.

Organized analysis can begin with a simple process of association. In the diagnostic context, this means linking a symptom with associated diagnoses, linking each one of those diagnostic possibilities with readily observable, inexpensive findings associated with each diagnosis, checking all of those findings in the patient, and comparing actual, positive findings on the patient with the array of diagnostic possibilities and associated findings. The output of this process reveals how well each of the diagnostic possibilities matches the patient. (Such a process of association should similarly form the basis for selecting among different treatment possibilities, as we shall see.)

This process of association is simple in two senses. First, the data items are quick, inexpensive, non-invasive findings from the patient history, physical examination and basic laboratory tests. Second, no clinical judgment is required to establish the simple associations between the findings and the diagnoses. The associations (distilled from the medical literature) are mere linkages that computer software can instantly arrange and rearrange as needed.

Physicians are not trained to begin diagnosis using external tools for this simple associative process. Instead, they employ clinical judgment from the very outset of care. Somehow, at each encounter with a new patient, physicians must rapidly select the right data, and then analyze that data correctly in light of vast medical knowledge. They believe that their judgment organizes data collection and analysis in a scientifically sophisticated manner (referred to as “differential diagnosis” in the diagnostic context). This is believed superior to mere information processing, because it applies scientific knowledge and, when successful, minimizes unnecessary data collection. An example is the correspondent (discussed in the preceding section) who analyzed very limited initial data in the case study and “came to just one possibility—Addison’s disease.” Moreover, clinical judgment involves observation and intuition based on personal interaction with the patient, informed by long experience with innumerable other patients. Physicians thus believe that clinical judgment involves much more than educated guesswork.

Yet, it is a fantasy to think that clinical judgment enables physicians to analyze patient problems reliably and efficiently.
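The "simple process of association" described in the passage above lends itself to a few lines of R. What follows is only a rough sketch of the idea, not the Weeds' actual software: the knowledge base, the diagnosis labels, the findings and the function names are all hypothetical placeholders, and nothing here is clinical guidance.

```r
# Hypothetical knowledge base: each diagnosis is linked to simple, inexpensive
# findings. Labels and linkages are illustrative placeholders only.
knowledge <- list(
  "Diagnosis A" = c("fatigue", "weight loss", "low blood pressure", "skin darkening"),
  "Diagnosis B" = c("fatigue", "weight gain", "cold intolerance"),
  "Diagnosis C" = c("fatigue", "pallor", "shortness of breath")
)

# Step 1: link a presenting symptom to every diagnosis associated with it.
candidates_for <- function(symptom, kb) {
  names(kb)[vapply(kb, function(findings) symptom %in% findings, logical(1))]
}

# Steps 2-4: take the findings tied to each candidate, check them against the
# patient's positive findings, and report how well each candidate matches.
match_diagnoses <- function(symptom, positive_findings, kb) {
  candidates <- candidates_for(symptom, kb)
  matched <- vapply(candidates,
                    function(d) sum(kb[[d]] %in% positive_findings), integer(1))
  total <- vapply(candidates, function(d) length(kb[[d]]), integer(1))
  out <- data.frame(diagnosis = candidates, matched = matched, total = total,
                    share = matched / total, row.names = NULL,
                    stringsAsFactors = FALSE)
  out[order(-out$share), ]
}

# Positive findings actually observed in a (hypothetical) patient.
patient <- c("fatigue", "weight loss", "low blood pressure")
result <- match_diagnoses("fatigue", patient, knowledge)
print(result)
```

No judgment is involved at any step: the output is just a ranked table showing how many of each candidate's associated findings turned up in the patient, which is the kind of linkage the authors say software can "instantly arrange and rearrange."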

ANALYTICS WILL GET SIGNIFICANTLY EASIER

Check this out. I've written before about the promise of open source apps, including HIT and statistical apps.

OK, so, as I post this we're mostly all fixated on the 2012 Summer Olympics. Here's a little bit of open source data mining/modeling (R Language) I ran across today (I have succumbed to the enticement of Twitter, wherein I found the link to this).

This is all it took, 20 brief lines of code*. The R script simply parses out the data from the web page linked after the listing:

library(XML)

library(drc)

url <- "http://www.databaseolympics.com/sport/sportevent.htm?enum=110&sp=ATH"

data <- readHTMLTable(readLines(url), which=2, header=TRUE)

golddata <- subset(data, Medal %in% "GOLD")

golddata$Year <- as.numeric(as.character(golddata$Year))

golddata$Result <- as.numeric(as.character(golddata$Result))

tail(golddata,10)

logistic <- drm(Result~Year, data=subset(golddata, Year>=1900), fct = L.4())

log.linear <- lm(log(Result)~Year, data=subset(golddata, Year>=1900))

years <- seq(1896,2012, 4)

predictions <- exp(predict(log.linear, newdata=data.frame(Year=years)))

plot(logistic, xlim=c(1896,2012),

ylim=c(9.5,12),

xlab="Year", main="Olympic 100 metre",

ylab="Winning time for the 100m men final (s)")

points(golddata$Year, golddata$Result)

lines(years, predictions, col="red")

points(2012, predictions[length(years)], pch=19, col="red")

text(2012, 9.55, round(predictions[length(years)],2))

http://www.databaseolympics.com/sport/sportevent.htm?enum=110&sp=ATH

That's pretty compact. Nice nested command/function calls, etc. Original article here.

* Assumes a (free) R Language runtime interpreter environment install.

UPDATE, AUG 5TH

Winning time in the 100 metres was 9.63. Usain Bolt of Jamaica.

I was just a bit curious, so I tabulated only the 20 years of winning times prior to this year's event.

Fitting a basic polynomial (recall that hated old high school algebra?) got me to 9.64 seconds for 2012, putatively 5x more "accurate" than the R Language model.

Just lucky there. I seriously doubt it'll be anywhere near polynomial-proportionally faster in 2016. In fact, the first-order linear trend (red dashed line) might well prove more "predictive" given the visually evident inter-Olympics variance.
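For anyone who wants to try the same comparison in R, here is a rough sketch. It rests on assumptions: I've plugged in the men's 100 m winning times from 1992 through 2008 as a stand-in for "the 20 years prior," and I've used a second-order polynomial since the degree isn't stated above, so the numbers it prints need not match the 9.64 figure.

```r
# Men's 100 m winning times, 1992-2008 (an assumed selection of Games;
# the exact years tabulated in the post are not listed).
gold <- data.frame(
  Year   = c(1992, 1996, 2000, 2004, 2008),
  Result = c(9.96, 9.84, 9.87, 9.85, 9.69)
)

# First-order (linear) trend and a second-order polynomial.
# The polynomial degree used in the post is not stated; 2 is a guess.
linear_fit <- lm(Result ~ Year, data = gold)
poly_fit   <- lm(Result ~ poly(Year, 2), data = gold)

# Extrapolate both fits one Olympiad ahead.
next_games  <- data.frame(Year = 2012)
linear_2012 <- unname(predict(linear_fit, newdata = next_games))
poly_2012   <- unname(predict(poly_fit, newdata = next_games))

# Compare both extrapolations with the actual 2012 winning time.
actual_2012 <- 9.63
errors <- c(linear     = abs(linear_2012 - actual_2012),
            polynomial = abs(poly_2012 - actual_2012))
print(round(c(linear = linear_2012, polynomial = poly_2012), 2))
print(round(errors, 3))
```

With only five data points, either fit is at the mercy of a single unusual Games, which is the inter-Olympics variance point made above.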

___

PALANTIR

Palantir is inspired by a simple idea—that with the right technology and enough data, people can still solve hard problems and change the world for the better. For organizations addressing many of today’s most critical challenges, the necessary information is already out there, waiting to be understood.

Nice. Still researching these people. One linked enterprise I found:

AnalyzeThe.US allows anyone to use Palantir to explore vast amounts of data only recently released into the public domain, including key datasets from www.data.gov. Information about key individuals, organizations, and activities exists in many places, and conducting meaningful analysis first requires the ability to integrate data seamlessly and completely. AnalyzeThe.US brings critical knowledge together on a single stage, while providing rich analytical applications that enable anyone to develop an intuitive picture of the complex flow of resources, money, and influence that affect how our government functions. Ultimately, by allowing citizens to analyze our democracy, AnalyzeThe.US democratizes analysis.

I signed up for an account immediately. I've already used Data.gov, but this looks like it will simplify doing (some) analytics.

I like their avowed commitment to "Civil Liberties / Privacy."

Palantir Technologies is a mission-driven company, and a core component of that mission is protecting our fundamental rights to privacy and civil liberties. Since its inception, Palantir has invested its intellectual and financial capital in engineering technology that can be used to solve the world’s hardest problems while simultaneously protecting individual liberty. Robust privacy and civil liberties protections are essential to building public confidence in the management of data, and thus are an essential part of any information system that uses Palantir software.

Yeah, we'll see. Talk is cheap. I've been a bit of a Crank on the whole "privacy" thing for a long time (ironic to an extent, given my all-over-the-internet presence).

A core engineering commitment

Some argue that society must “balance” freedom and safety, and that in order to better protect ourselves from those who would do us harm, we have to give up some of our liberties. We believe that this is a false choice in many areas. Particularly in the world of data analysis, liberty does not have to be sacrificed to enhance security. Palantir is constantly looking for ways to protect privacy and individual liberty through its technology while enabling the powerful analysis necessary to generate the actionable intelligence that our law enforcement and intelligence agencies need to fulfill their missions.

We believe that privacy and civil liberties-protective capabilities should be “baked in” to technology from the start rather than grafted onto it later as an afterthought. By seamlessly integrating these features into our software, we reduce user friction that might otherwise create incentives to try to work around these protections. With the right engineering, the technologies that protect against data misuse and abuse can be the same technologies that enable powerful data analysis.

___

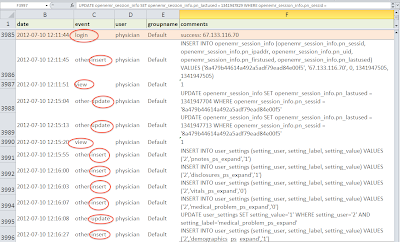

Can't wait to start drilling down into this (below, installed on my iMac):

IN OTHER NEWS

Thomas E Sullivan, MD Chief Privacy Officer, Chief Strategic Officer DrFirst.Com, Inc. testifies before ONC

...We have been talking about “administrative simplification” for many years, even prior to the passage of HIPAA. Although there has been progress, much remains to accomplish.

I believe physicians and selected other clinical providers are among the most highly credentialed and authenticated professionals in our society. There are very many examples of redundancy, unnecessary delays and excess expenses in carrying out these programs. Physicians are typically licensed in one or more states, separately credentialed by many health plans and a few hospitals and also undergo certain privileging criteria within each hospital or otherwise licensed facility. The system is getting more complex daily and cries out for a streamlined and collaborative approach which I believe is part and parcel of the vision maintained by an NSTIC.

Cost effective care and the elimination of redundancy need to be hallmarks of 21st century medicine. Put in another way, although the first rule of medicine traditionally recommended for physicians is:

“Primum Non Nocere”.....First, Do No Harm

I would add Dr. Sullivan’s second rule:

“Secundo, Propera Ne Me”....Second, Don’t Slow Me Down

Indeed. Can you say "workflow"?

___

AUGUST 2nd UPDATE: NEW BLOG

Welcome to eCW Talks, a blog initiated by eClinicalWorks to keep you informed and up-to-date on the happenings here, in our extended community and the electronic health records industry in general. We have always committed ourselves to staying ahead of trends and this will be one vehicle for that. Posts will come from me and various additional experts that can provide a new voice and unique glimpse into the topic covered by that entry. In this ever evolving market, it is important to us that we are a trusted resource, acting as transparent as possible.

This should be interesting.

___

August 4th HIT / workforce update:

Nursing, Engineering Invention Launches; Improves Nurses Worldwide

Cross-posted with permission

This UTK alum is pretty proud.

A joint endeavor between the colleges of nursing and engineering has been launched as a new product to help build a better workforce of health professionals worldwide.

Called Lippincott’s DocuCare EHR, the new product integrates electronic health records (EHR) commonly used in hospitals and medical offices into a simulated learning tool for students. Proficiency using EHR is paramount for nursing students as the Obama administration has challenged health care providers nationwide to transition to this new technology.

The device was developed by Tami Wyatt, associate professor of nursing, and her graduate student Matt Bell (now an alumnus), along with Xueping Li, an associate professor in industrial and information engineering, and his graduate student, Yo Indranoi. Lippincott Williams & Wilkins (LWW), a leading international publisher for health care professionals and students, purchased the invention in 2010. It is now being marketed worldwide.

“Today’s new graduate nurses must be adept in using this technology, including electronic health records, to comply with accreditation standards,” said Wyatt. “Relying on the limited exposure to EHR technology that nursing students get during their clinical experiences is just not enough.”

By integrating EHR technology into multiple courses across the nursing curricula, the product provides continuity in learning, and students begin relying on EHRs as tools to gather data and anticipate patient care.

The product includes more than seventy pre-populated simulated patient records and cases. Each case includes links to LWW textbooks, giving students access to diagnosis information, procedure descriptions and videos, and other evidence-based content that is used in over 1,200 hospitals nationwide.

Instructors can bring classroom case studies to life by creating simulated patient records, building assignments, and evaluating student documentation performance. The program is designed to better prepare students for practice, in a fully realistic, yet risk-free, simulated environment. LWW will train faculty on how to use this new tool and integrate it into their curriculum.

As part of an ongoing partnership with Laerdal Medical—the top distributor of simulated mannequins for nursing education—the tool includes a variety of Laerdal’s patient scenarios and simulations.

The development of the tool, which began in 2007, has been a collaboration across UT’s campus. Law students offered legal advice for the startup company that marketed Lippincott DocuCare before it was purchased by LWW. Business students helped design a business plan. The UT Research Foundation copyrighted the technology.

Wyatt and Li are co-directors of the Health Information Technology and Simulation Laboratory (HITS Lab), an organized research unit at UT. The overall goal of the HITS Lab is to advance the science of health information technology and examine ways HITS enhances consumer health and professional health education.

To learn more about DocuCare, including purchasing information, visit the website.

CONTACT

Whitney Heins (865-974-5460, wheins@utk.edu)

IN OTHER NEWS:

Atigeo Launches Big Data Semantic Search Tool Using NIH PubMed

Yeah, "semantic search." Seems like that would lend itself to the analytics problems inherent in "semi-structured / narrative" EMR notes. Check out the interesting Atigeo xPatterns YouTube below.

Atigeo, a big data analytics company, has launched PubMed Explorer, an application that allows medical researchers to search the federal database to present results of medical studies based on context in a graphical display.

PubMed is a National Institutes of Health database that provides access to more than 400,000 medical research documents.

PubMed Explorer uses Atigeo's xPatterns big data semantic search platform in the cloud to fine-tune search results so the database can learn the user's search patterns. PubMed's linear search capabilities slow medical research, according to Atigeo.

xPatterns can apply analytics to PubMed's unstructured data set to deliver relevant results by generating domain concepts, Atigeo reported.

Semantic search is a form of technology that can deliver results based on the context of a search term...

___

gBench?

Dr. Rowley (Practice Fusion co-founder) sent me a LinkedIn invite to sign up for this. Nice of him. So, I signed up. We'll see what happens. Smells like CareerLadder.com Teen Spirit to a degree.

- Whether you are already a Healthcare IT Consultant, looking to get into the business, or have a need for Healthcare IT resources, gBench simplifies the engagement process by giving consultants and recruiters the information they need to determine where their best time is spent.

- Consultants can build a robust profile in minutes utilizing our standardized naming convention for skill sets, detailed contract preferences, and the ability to publish your availability.

- Employers search the network according to their acceptable engagement terms and timelines with the option to send opportunities to qualified consultants.

- Eliminate the fluff...and get to the point! Join gBench and get in the game!

LATEST MU INCENTIVE $$$ UPDATE

$6.174 billion paid out. PDF link to the report here.

___

More to come...