___

REC "PPCP" RECRUITING TO DATE

No one at ONC really wants to talk about it for the public record these days (nor even much privately). One number that wafted my way recently was ~17,000 sign-ups nationally to date. "PPCP" means "Priority Primary Care Providers," who can get federally subsidized REC soup-to-nuts consulting/facilitation services during the first two years of the HITECH program. Eight months in, then, REC "enrollment" (which is optional, recall) is running at only about 50% of target-to-date in the aggregate, measured against the national goal of bringing 100,000 primary care providers "meaningfully" on board within two years.
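For readers who want to check the "about 50%" figure, here is a minimal sketch. It assumes (an assumption, not an ONC definition) that the two-year, 100,000-provider goal is prorated evenly month by month. The function name `pace_vs_target` is hypothetical.

```python
def pace_vs_target(signups: int, goal: int, months_elapsed: float, program_months: float) -> float:
    """Sign-ups as a fraction of a linearly prorated target-to-date.

    Assumption (hypothetical): the goal is meant to be reached at a constant
    monthly rate across the whole program window.
    """
    target_to_date = goal * months_elapsed / program_months
    return signups / target_to_date

# ~17,000 sign-ups, 100,000-provider goal, 8 months into a 24-month window
ratio = pace_vs_target(17_000, 100_000, 8, 24)
print(f"{ratio:.0%}")  # 51%
```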

REC provider recruitment barriers persist, obviously. To wit:

- EHR cost and concomitant ROI dubiety (given productivity loss and "everyone-benefits-except-me" concerns);

- Skepticism that the Meaningful Use reimbursement money will actually be forthcoming (particularly in the wake of the 2010 mid-term election outcomes -- with Republicans now loudly vowing to de-fund everything associated with "ObamaCare");

- Specific physician anxiety and anger regarding the yet-again pending draconian Medicare reimbursement cuts;

For example: "Unless Congress acts, physicians are just weeks away from taking a 23% cut in Medicare reimbursements mandated by the sustainable growth rate (SGR) formula. The cut is scheduled to go into effect on Dec. 1 and will be followed on Jan. 1 with an additional cut, bringing the total to 25%."

- Medicaid provider participation remains an acute concern. Some states (including my own) are considering dropping out of the Medicaid program, in light of rapidly increasing patient enrollment (owing to our record-high and seemingly intractable unemployment rate) along with reductions in provider reimbursements during a difficult time of state budget deficits. Even should this not happen, state participation in the HITECH Meaningful Use program is voluntary, and not fully federally funded (states have to submit a "plan" to the feds, and come up with an unfunded 10% of "reasonable administrative expenses");

- More broadly, antipathy toward anything "the government" does, along with suspicion of its motives. One such allegation is that this is all about the feds wanting to be able to easily gather all of our medical information in order to dictate [1] what treatments we can obtain (and how doctors must perform them) and [2] what we can eat (Michelle Obama's menacing BMI Violator Celery Stick Police);

- Vendor, VAR, and commercial consultant assertions that "you don't need REC help, we'll get you to MU" (a pitch that gains traction because many of us RECs have to charge subscription, hourly, or back-end fees, given that the HITECH subsidy is not 100% and is paid out incrementally upon "milestones");

- General resistance-to-change inertia. As my mentor Dr. Brent James was fond of saying, "the only person who enjoys change is a baby with a wet diaper."

"...Noting that the private sector has not been able to develop and adopt methods for significantly improving health outcomes in the U.S., [Paul Tang, MD] called recent federal legislation "transformative" by providing the goals, timetables, and financial incentives necessary to achieve desired change. "All the necessary forces are aligned," he said.

The Affordable Care Act (ACA), and the American Recovery and Reinvestment Act (ARRA) of 2009 share many of the same requirements for achieving and documenting cost and quality of care criteria, said Tang, and both will depend on the "meaningful use" of certified EHRs targeted by ARRA for widespread use by 2014. Meaningful use requirements address key health goals, including improved quality, safety and efficiency; engaging patients and their families; improving care coordination; improving population and public health; and ensuring privacy and security protections.

Tang was a keynote speaker at the MedeAnalytics Clinical Leadership Summit held Nov. 11-12 at the Fairmont Hotel in San Francisco."

Notwithstanding the truth of the foregoing assertions, I detect increasing wafts of circle-the-wagons angst, given that The Tea Party Cometh soon to the halls of Congress. While some feel that HITECH and the RECs will be safe from the 2011 legislative

HIT (and REC assistance) continues to be a tough sell, at least from my perspective in Nevada (though, in fairness, my state is the fiscal basket case of the nation: highest unemployment rate, highest mortgage foreclosure rate, 80% of non-foreclosed residential mortgages "under water," proportionally the largest state budget deficit in the nation, etc. A swell place to live these days).

I had a bit of irascible Photoshop commentary fun with this entire "recruitment" notion early on.

Whatever. They ostensibly re-hired me for the Meaningful Use Adoption Support "technical assistance"/QI effort, upon which I really want to focus my energy in lieu of all this "sales rep" stuff.

___

OUR CORE ADOPTION SUPPORT M.O.

The minimal essence I fought hard internally to establish. It was not universally loved within my REC team, having been deemed in some incumbent quarters too "old school / industrial." Right.

Rather simple, conceptually (props to my QA Guru wife for giving me her SOP template to use). My argument (which prevailed in the end) was that, should we manage nothing else beyond getting our client providers to Stage One Meaningful Use compliance, we would have met both our ONC and client-contractual obligations. Simply a matter of exigent priority, i.e.,

- Document and visualize the workflow components for each Meaningful Use criterion, as dictated by each EHR platform;

- Write it all up in a "Standard Operating Procedure" (SOP*) for client distribution;

- Include in the SOPs the sets of "screen shots" (where relevant) that show any authorized user how to navigate to the target MU "money fields" (where the data have to be consistently recorded).
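To make the three-part deliverable above concrete, here is a hypothetical sketch of an SOP record keyed by MU criterion and EHR platform, with a check for which criteria still lack a write-up. The class, the field names, and the `EHR-A` platform are illustrative inventions; the criterion labels are just examples of Stage One measures.

```python
from dataclasses import dataclass, field

@dataclass
class SopEntry:
    """One SOP section: how to document a single MU criterion in one EHR (hypothetical schema)."""
    criterion: str                 # MU criterion being documented
    ehr_platform: str              # EHR product whose workflow this describes
    workflow_steps: list[str]      # ordered workflow / navigation steps
    money_field: str               # where the data must be consistently recorded
    screenshots: list[str] = field(default_factory=list)  # image files, where relevant

def missing_criteria(sops: list[SopEntry], platform: str, required: set[str]) -> set[str]:
    """Return the required criteria that have no SOP entry yet for the given platform."""
    covered = {s.criterion for s in sops if s.ehr_platform == platform}
    return required - covered

required = {"CPOE", "Problem list", "Smoking status"}
sops = [
    SopEntry("CPOE", "EHR-A", ["Open chart", "Open order entry", "Enter and sign order"], "Order entry screen"),
]
print(sorted(missing_criteria(sops, "EHR-A", required)))  # ['Problem list', 'Smoking status']
```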

As I have stated before, I would favor offloading this sort of thing onto the EHR vendors, given that they know their products (specifically the MU documentation navigation paths within their respective systems) and that such paths are, implicitly anyway, part of ONC Certification. That would leave RECs the time to concentrate on actual "process improvement" via which to make the entire effort a bottom-line winner for the providers.

In fact, were I setting ONC EHR Cert policy, I would have made such explicit instructions part of certification requirements. Perhaps once "permanent certification Registrar" requirements are set forth, such will be the case. I will certainly lobby for it. But, I won't be holding my breath.

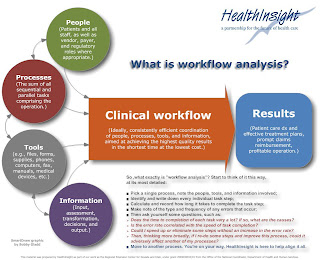

EHR WORKFLOW, TASK TIME-TO-COMPLETION CONCERNS

You might recall my earlier blog post reflections regarding how Meaningful Use compliance could easily consume so much additional FTE labor cost as to negate the incentive reimbursements. "Use" case in point, from a study I found posted on our HITRC.

I'm sorry, but THIRTY-FIVE seconds to document a CPOE lab order (in the VistA EHR)? OK, annualize that, extrapolating from a couple of dozen patients per day. It's not viable. As I've pointed out elsewhere on this blog, adding just a couple of minutes of aggregate labor per chart to document all the Meaningful Use criteria will serve to nullify the reimbursement payments. Hence my personal imperative of trying to inculcate "Lean" principles within my client clinics as I work with them, in order to find ways to reduce the overall workflow FTE burden in support of effective HIT adoption.
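The annualization is simple arithmetic. Here is a minimal sketch that assumes (hypothetically) 24 patients per day, one such task per patient, and 250 clinic days per year. Plug in your own clinic's figures; the function name is illustrative.

```python
def annual_hours(seconds_per_task: float, tasks_per_day: float, clinic_days_per_year: int) -> float:
    """Annualize a per-task documentation time into hours of labor per year."""
    return seconds_per_task * tasks_per_day * clinic_days_per_year / 3600

# Assumptions (hypothetical): 24 patients/day, one task per patient, 250 clinic days/year
cpoe_hours = annual_hours(35, 24, 250)       # 35 s per CPOE lab order
extra_mu_hours = annual_hours(120, 24, 250)  # "a couple of minutes" of extra MU documentation per chart
print(round(cpoe_hours, 1), round(extra_mu_hours, 1))  # 58.3 200.0
```

Multiply those hours by a clinic's own loaded hourly labor cost to get a dollar figure to set against the incentive payments.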

___

RE: "LEAN HEALTH CARE," AN UNSOLICITED SHOUT-OUT

MY ONE-PAGE SUMMARY TAKE ON "WORKFLOW"

SOME THOUGHTS ON "LEAN TRANSFORMATION"

Returning for a moment to the excellent book "On The Mend."

"Lean transformation is all about Dr. Deming’s Plan Do Study Act (PDSA), otherwise known as the scientific method. There is no simple formula to copy and no quick path to success. Instead you must perform your own experiments— tailored to the mission and circumstances of your organization. And then you must honestly study the results and act on your findings, including sharing them with the healthcare community."

Yeah, "PDSA." All the rage in progressive process QI circles for quite some time now (and, color me a believer, as I stated early on).

I would revise the acronym, however, to "SPDSA," i.e., "STUDY, Plan-Do-Study-Act." At the risk of belaboring a point that may be implicit in the minds of most people involved in this work (i.e., that an initial quantitative baseline assessment "study" is reflexively included in the "Plan" phase if it's truly "scientific"), I never lose sight of the source, the late Dr. Deming himself:

"Statistical control. A stable process, one with no indication of a special cause of variation, is said to be, following Shewhart, in statistical control, or stable. It is a random process. Its behavior in the near future is predictable. Of course, some unforeseen jolt may come along and knock the process out of statistical control. A system that is in statistical control has a definable identity and a definable capability (see the section "Capability of the process," infra).

In the state of statistical control, all special causes so far detected have been removed. The remaining variation must be left to chance -- that is, to common causes -- unless a new special cause turns up and is removed. This does not mean do nothing in the state of statistical control; it means do not take action on the remaining ups and downs, as to do so would create additional variation and more trouble (see the section on overadjustment, below). The next step is to improve the process, with never-ending effort (Point 5 of the 14 points). Improvement of the process can be pushed effectively, once statistical control is achieved and maintained (so stated by Joseph M. Juran many years ago)."

["Out of the Crisis," Ch 11, page 321]

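In Deming's view, reacting to the random ups and downs of a stable process as though each had a special cause is "tampering" (overadjustment), and trying to "improve" a process that is not yet empirically stable is likewise premature. Such interventions typically only serve to make matters worse.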
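Deming illustrated overadjustment with his well-known funnel experiment. The sketch below simulates its first two rules under assumed normally distributed common-cause noise. Rule 1 leaves a stable process alone, while Rule 2 "corrects" the aim by the negative of each last deviation from target. The tampered output's variance comes out at roughly twice the untouched one. The function name and parameters are illustrative.

```python
import random
import statistics

def funnel_variances(n: int = 50_000, sigma: float = 1.0, seed: int = 1) -> tuple[float, float]:
    """Return (variance without tampering, variance with Rule 2 tampering)."""
    rng = random.Random(seed)
    target = 0.0
    aim = target                          # where the "funnel" is pointed under Rule 2
    untouched, tampered = [], []
    for _ in range(n):
        noise = rng.gauss(0.0, sigma)     # common-cause variation only
        untouched.append(target + noise)  # Rule 1: never adjust
        result = aim + noise
        tampered.append(result)
        aim -= result - target            # Rule 2: compensate for the last miss
    return statistics.pvariance(untouched), statistics.pvariance(tampered)

v_stable, v_tampered = funnel_variances()
print(round(v_tampered / v_stable, 2))  # roughly 2
```

Under Rule 2 each result becomes the difference of two independent noise draws, which is why the variance doubles even though every single adjustment looked "reasonable."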

A quick example from my own environmental lab "SPC" (statistical process control) experience more than 20 years ago (before indoor plumbing, in IT terms). One of my projects involved the development of a computerized system via which to track the accuracy, precision, and stability of the lab's numerous multi-channel radiation detector instruments.

QC personnel ran and recorded daily "sealed source" and "background" checks for every detector, along with QC specimens known as "matrix spikes," "D.I. water spikes," "matrix blanks," "matrix dupes," etc. (we were also subject to "blind" QC samples submitted by clients and regulators, posing as production samples). My software calculated the requisite statistics every day (e.g., mean response, std deviation/"coefficient of variation," upper and lower "warning" and "control limits," and linear regression trend slope), updating them with each new data point (through the first 20 entries, that is). Statistical "outliers" would then be readily apparent, and any out-of-calibration "drift" would show up in the fitted "slope." In the foregoing example, the detector looks to be "stable," evincing a random daily variability of about 1% of the average.
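Here is a minimal sketch of the kind of daily QC statistics described above. It is not the original lab software. Assumptions: warning limits at mean ± 2 SD and control limits at mean ± 3 SD (a common Shewhart-chart convention), limits frozen once 20 baseline points have accrued, and an ordinary least-squares slope against day index as the drift indicator. Function names and data are hypothetical.

```python
import statistics

def baseline_limits(values: list[float], n_baseline: int = 20) -> dict[str, float]:
    """QC statistics from the first n_baseline points (needs at least 2).

    Recomputing this each day updates the limits with every new point until
    n_baseline points exist; after that the limits stay fixed.
    Assumed convention: warning = mean +/- 2 SD, control = mean +/- 3 SD.
    """
    base = values[:n_baseline]
    mean = statistics.fmean(base)
    sd = statistics.stdev(base)
    return {
        "mean": mean,
        "sd": sd,
        "cv_percent": 100.0 * sd / mean,  # coefficient of variation
        "lower_warning": mean - 2 * sd, "upper_warning": mean + 2 * sd,
        "lower_control": mean - 3 * sd, "upper_control": mean + 3 * sd,
    }

def outside_control(values: list[float], limits: dict[str, float]) -> list[int]:
    """Indices of points beyond the control limits (candidate special causes)."""
    return [i for i, v in enumerate(values)
            if not limits["lower_control"] <= v <= limits["upper_control"]]

def trend_slope(values: list[float]) -> float:
    """Ordinary least-squares slope of response vs. day index (drift per day)."""
    xs = list(range(len(values)))
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(values)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx

daily = [100.0, 101.0, 99.0, 100.0] * 5 + [110.0]  # made-up responses; last point is a deliberate outlier
limits = baseline_limits(daily)
print(outside_control(daily, limits))  # [20]
```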

Below, same chart, annotated to call attention to a couple of early potential "mini-trends." Across the initial 20 or so data entries, it appeared that the detector might be trending sharply down; shortly thereafter, once the "dive" had abated, there was a downward "run" of six consecutive entries (the red oval).
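A "run" can be flagged mechanically. This sketch reads "run of six" as six points in a row, each lower (or higher) than the one before, which matches one of Nelson's chart rules. That reading is an assumption, since other rulebooks define runs differently (e.g., consecutive points on one side of the mean). The function name is hypothetical.

```python
def monotonic_runs(values: list[float], run_length: int = 6) -> list[tuple[int, int, str]]:
    """Find windows of run_length consecutive points that steadily fall or rise.

    Returns (start_index, end_index, direction) for each matching window.
    """
    hits = []
    for end in range(run_length - 1, len(values)):
        window = values[end - run_length + 1 : end + 1]
        steps = [b - a for a, b in zip(window, window[1:])]  # day-to-day changes
        if all(s < 0 for s in steps):
            hits.append((end - run_length + 1, end, "down"))
        elif all(s > 0 for s in steps):
            hits.append((end - run_length + 1, end, "up"))
    return hits

print(monotonic_runs([10, 9, 8, 7, 6, 5, 6]))  # [(0, 5, 'down')]
```

As the next paragraph argues, a flagged run is a prompt for judgment, not an automatic reason to pull a detector offline.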

Given that our lab hewed to "forensic standards," owing to the fact that much of our work was destined for use as evidence in contamination/dose exposure litigation, we tended to be hyper-vigilant when it came to QC. Nonetheless, textbook "applied process control statistics" can't do your thinking for you. Should detector LB5100/TA/002 have been taken offline for intervention after the first 20 accrued data points? Or, in the wake of a subsequent negative "run" of six daily results? Obviously, our lab's Technical Director didn't think so, and the overall plot tended to back his sanguinity.** No SPC "outliers" and about a 1% or less daily average variation across nearly three months; what's not to love? (LOL, such was not always the case.)

** In this case, you bring to bear contextual expert judgment. Like an aircraft pilot cross-referencing her instrument panel under IFR conditions for accurate and safe flight absent the ability to see outside the cockpit, our lab manager would take into account not only the daily sealed-source detector disintegration "counts," but also the correlative results of routine bench-chem derived lab QC samples (the breadth of spikes and dupes inserted into the production stream). Moreover, expert judgment also informs that, given the stochastic nature of radionuclide decay emissions (all of them half-life "decay-corrected" in our system btw), were you to re-run sealed sources multiple times per day (not practical), you would still see random intra-day variation.

Those were fun times. And I have tried to internalize all of the "lessons learned," foremost among them that, should you fail to discover (or otherwise ignore) knowable "special causes" impacting a process and rectify them, your efforts at "improvement" may well be counterproductive.
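Two pieces of arithmetic sit behind the footnote above. The first is half-life decay correction, N0 = N · 2^(t/T½). The second is Poisson counting statistics, under which a count of N has a standard deviation of about √N, i.e., a relative scatter of 1/√N. Here is a minimal sketch with hypothetical function names and made-up numbers:

```python
import math

def decay_corrected(counts: float, elapsed_days: float, half_life_days: float) -> float:
    """Correct a count observed after elapsed_days back to the source's reference date."""
    return counts * 2.0 ** (elapsed_days / half_life_days)

def poisson_relative_sd(counts: float) -> float:
    """Relative standard deviation of a Poisson-distributed count: sqrt(N) / N."""
    return 1.0 / math.sqrt(counts)

# Hypothetical numbers: a count observed exactly one half-life after the reference date
print(decay_corrected(5_000, elapsed_days=30.0, half_life_days=30.0))  # 10000.0
print(poisson_relative_sd(10_000))  # 0.01
```

For example, a check that accumulates about 10,000 counts carries roughly 1% relative scatter from counting statistics alone. So some random day-to-day variation is expected even from a perfectly healthy detector.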

Statistical estimates are tools. They don't properly replace domain knowledge and experience. (Moreover, just because Excel now enables you to effortlessly fit an "nth-degree" polynomial to a scatter of a handful of data points -- replete with a nicely displayed equation and impressive R-Squared stat -- doesn't mean you should.)
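The polynomial caveat is easy to demonstrate without Excel. Through any n points with distinct x values there is a polynomial of degree n-1 that passes through every point exactly, so its R² is 1 by construction. The five data values below are made up for illustration (roughly flat around 10), and the helper names are hypothetical.

```python
def linear_fit(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares line: returns (slope, intercept)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def interpolating_poly(xs: list[float], ys: list[float], x: float) -> float:
    """Evaluate the degree-(n-1) polynomial through all n points (Lagrange form)."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total

def r_squared(ys: list[float], preds: list[float]) -> float:
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    y_bar = sum(ys) / len(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot

xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [10.1, 9.8, 10.3, 9.9, 10.2]  # made-up, essentially flat, noisy data

slope, intercept = linear_fit(xs, ys)
r2_line = r_squared(ys, [slope * x + intercept for x in xs])
r2_poly = r_squared(ys, [interpolating_poly(xs, ys, x) for x in xs])

print(r2_poly)                                    # 1.0: a "perfect" fit to noise
print(round(interpolating_poly(xs, ys, 6.0), 1))  # 16.1: wild extrapolation
print(round(slope * 6.0 + intercept, 2))          # the line stays near 10
```

The interpolating polynomial "explains" all of the noise and then extrapolates to about 16 at x = 6, while the straight-line fit, with its unimpressive R², stays close to the data's actual level.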

Now that I'm actually beginning to work with my small outpatient primary care clinics on "workflow analysis and re-design," though, I know I'm not going to get anywhere close to such a level of rigorous statistical process analysis. Most of our workflow assessments are bound to be largely "qualitative" (and MU-compliance driven, at least across the Stage One period).

I guess we'll just take our "wins" where we can get them. Still, I remain drawn to true PDSA "lean" process improvement principles and procedures, e.g., as set forth by leading organizations such as the ThedaCare Center for Healthcare Value:

"The A3 method assures that the PDSA cycle is followed and the changes are monitored. The process steps can be documented in a variety of formats, but it typically includes the following elements, on a single piece of paper. A3 refers to the standardized paper size of 11” x 17”.

- Title – Names the problem, issue, or topic

- Owner/Date – Identifies who owns the problem or issue and the date of the latest revision

- Background – Why is this important? What background information is important? What have we seen in gemba?

- Current Conditions – Show the current state using pictures, graphs, data, etc. What is the problem?

- Goals/Targets – What results do you expect? What are the key measures? (quality, cost, morale, delivery, access, etc.)

- Analysis – What is the root cause(s) of the problem? If you work to eliminate this root cause, will you make progress toward solving the problem?

- Countermeasures – What proposed actions do you intend to take to reach the target condition? How will you show how your countermeasure will address the root causes of the problem? What is the new standard process?

- Implementation – What needs to be done? Who will do it? By when? What are the performance indicators to show progress? How will people be trained in the new process?

- Follow Up – What issues can be anticipated? How will you capture and share learning? How will you continuously improve or begin the cycle again (PDSA)?"
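Treated as a data structure, the A3 template above is simply nine named sections. Here is a minimal sketch (class and method names hypothetical, draft contents made up) that flags which sections of a draft A3 are still blank:

```python
from dataclasses import dataclass, fields

@dataclass
class A3Report:
    """One-page A3 problem-solving record; one field per section in the list above."""
    title: str = ""
    owner_date: str = ""
    background: str = ""
    current_conditions: str = ""
    goals_targets: str = ""
    analysis: str = ""
    countermeasures: str = ""
    implementation: str = ""
    follow_up: str = ""

    def missing_sections(self) -> list[str]:
        """Names of sections still left blank (whitespace-only counts as blank)."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

draft = A3Report(title="CPOE lab-order entry takes too long", owner_date="Clinic manager (hypothetical)")
print(draft.missing_sections())
```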

MORE ON CLINICAL "SCIENCE"

One place wherein I routinely hang out.

These people are hardcore. (I have to admit to some episodic "scientism" wariness when reading some of their content.) Among other topics, there's an ongoing debate at SBM regarding the epistemological and utilitarian differences (if any) between "EBM" (Evidence-Based Medicine) and "SBM" (Science-Based Medicine). As we ponder expanded "CER" (Comparative Effectiveness Research) as envisioned to be facilitated by ever more widespread HIT adoption, I would expect to see some heated debate at SBM on the subject (CER is already much unloved in other curmudgeonly clinical circles).

___

BTW: ONC now has a blog.

Worth visiting and perusing.

QUICK UPDATE:

ANOTHER NICE RESOURCE I JUST FOUND AND JOINED

Very nice topical podcasts. Two thumbs up.

___
