Begs a question or two, perhaps?

One of the most troubling issues around generative AI is simple: It’s being made in secret. To produce humanlike answers to questions, systems such as ChatGPT process huge quantities of written material. But few people outside of companies such as Meta and OpenAI know the full [sic] extent of the texts these programs have been trained on.

Some training text comes from Wikipedia and other online writing, but high-quality generative AI requires higher-quality input than is usually found on the internet—that is, it requires the kind found in books. In a lawsuit filed in California last month, the writers Sarah Silverman, Richard Kadrey, and Christopher Golden allege that Meta violated copyright laws by using their books to train LLaMA, a large language model similar to OpenAI’s GPT-4—an algorithm that can generate text by mimicking the word patterns it finds in sample texts. But neither the lawsuit itself nor the commentary surrounding it has offered a look under the hood: We have not previously known for certain whether LLaMA was trained on Silverman’s, Kadrey’s, or Golden’s books, or any others, for that matter.

Pirated books are being used as inputs for computer programs that are changing how we read, learn, and communicate. The future promised by AI is written with stolen words.

Upwards of 170,000 books, the majority published in the past 20 years, are in LLaMA’s training data. In addition to work by Silverman, Kadrey, and Golden, nonfiction by Michael Pollan, Rebecca Solnit, and Jon Krakauer is being used, as are thrillers by James Patterson and Stephen King and other fiction by George Saunders, Zadie Smith, and Junot Díaz. These books are part of a dataset called “Books3,” and its use has not been limited to LLaMA. Books3 was also used to train Bloomberg’s BloombergGPT, EleutherAI’s GPT-J—a popular open-source model—and likely other generative-AI programs now embedded in websites across the internet. A Meta spokesperson declined to comment on the company’s use of Books3…

OK. Very interesting Atlantic Monthly article. Worth your time.

Been quite the hot topic of late. Recall my post from last December: "Malign Technologies Update: AI Natural Language Generation (NLG). Who might have copyright ownership claims to AI-generated human-readable text?"

Comment I posted on another blog yesterday.

Screenshot from my Kindle:

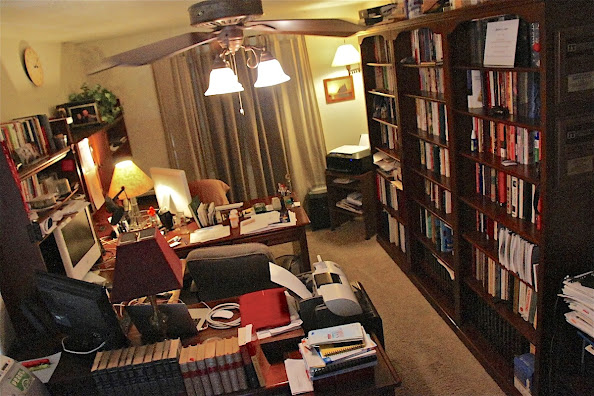

My Unsupervised Training Data.

Below, my Las Vegas loft library Data Warehouse in 2013.

I still miss that pad. We had crammed floor-to-ceiling bookcases all over the place. We've since given away about 90% of our hardcopy books. I'm getting to where I do much better with eBook and online reading as my old coot eyesight atrophies.

After getting my Master's, I taught Critical Thinking and Argument Analysis as adjunct evening faculty at UNLV from 1999 to 2004 (during my bank risk analyst days). Back then, academic plagiarism concerns focused mostly on how easy Microsoft Word and the like made Ctrl-C / Ctrl-V copy & paste. Now we get to wring our hands over students simply having ChatGPT ghostwrite their assignments for them.

Beyond academia, there's now the uneasy prospect of AI quietly writing our news stories, magazine articles, books, screenplays, political speeches, etc. Well, in the case of Donald Trump, it'd certainly add immeasurable coherence:

Yeah, it's not funny.

So, back to the original riff here. Issues of IP "piracy," "copyright," and "fair use" aside, how does my lifetime-accrued "wetware" "training data" differ ethically from theirs? For the sake of argument, let's assume that these AI companies paid retail for every title available on Amazon (neutralizing the "piracy" beef) and then used the authors' prose simply as "training data," rather than publishing and disseminating verbatim "unauthorized copies."

OK, backing up a tad in paraphrase: "To produce humanlike answers to questions, people like BobbyG process huge quantities of written material. But few people ... know the extent of the texts he has been trained on."

Now, I could never keep up with the computers. My consumption of "training data" would be nanoscopically puny by comparison, in terms of sheer volume. So, perhaps AI will soon be able to kick my nominally formidable verbal butt, in terms of both topical analytic acumen and creative elegance of rhetorical flourish (to the extent that I can be said to possess the latter competence).

I guess we'll know before long. Maybe. Color me a bit skeptical as yet.

Just wondering. What do you think? (LOL, it'd be funny if I got AI "responses" generated by people using ChatGPT.)

CODA

I guess I'm kinda strange. Unremarkable B student in high school in NJ (albeit a voracious reader from early on, well before HS). Left home at 18 in 1964 to go on the road with a bar band in lieu of college. Traipsed all over the U.S. and Canada. Got politicized in 1967 when I hit California for the first time. I joke that I "was the only rock & roll guitar player in the country with subscriptions to Harper's, The New Yorker, the Atlantic, Ramparts, The New Republic, The Washington Post, and The Washington Monthly." Just about all of my fellow musicians wanted only to jaw about other musicians and bands and their recordings, and axes and equipment.

I didn't really fit in. There was more important stuff out there.

Then, after going White Collar in the wake of finally getting my undergrad degree at the age of 39, I found it difficult to fully fit in with the "suits." The cubicle crowd didn't get me either.

I now just refer to myself as a "life-long unlearner."

Running outa time. There's just too much to reconsider. Sometimes the current relentless vulgar media absurdity gets away with me.

SATURDAY ERRATUM

Ugh. Morocco death toll will continue to mount. Terrible.

More to come...

__________
